[Work Log] Debugging ML Gradient (part 2)

Resuming debugging of analytical gradient.

I noted yesterday that the error we observed in K' was small -- on the order of 1e-5 -- implying that it wasn't sufficient to explain the end-to-end error we're seeing. But we should put that into context. The delta from the numerical gradient is 1e-7, so the error in K' is around 1%, i.e. in the noticeable range.

Also remember that running the analytical gradient using the K' from the numerical algorithm showed no noticeable change to the results. So K' probably isn't the issue.

Today's strategy: implement a legitimate test-bed that we can run for several deltas and get all intermediate values for both numeric and analytical gradient.
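
A minimal sketch of what such a test-bed could look like, written in Python rather than MATLAB and using only the standard library. The names `central_difference`, `gradient_report`, `toy_g` and `toy_g_grad` are hypothetical, and `toy_g` is only a stand-in for the real g(x), which is not reproduced here. The same comparison would be repeated for each intermediate quantity (K', V', and so on).

```python
import math


def central_difference(f, x, i, delta):
    """Estimate df/dx_i with a symmetric step of size delta."""
    hi = list(x)
    lo = list(x)
    hi[i] += delta
    lo[i] -= delta
    return (f(hi) - f(lo)) / (2.0 * delta)


def gradient_report(f, grad_f, x, deltas):
    """Return one (delta, numeric, analytic, worst_abs_error) row per delta."""
    analytic = grad_f(x)
    rows = []
    for delta in deltas:
        numeric = [central_difference(f, x, i, delta) for i in range(len(x))]
        worst = max(abs(a - n) for a, n in zip(analytic, numeric))
        rows.append((delta, numeric, analytic, worst))
    return rows


# Hypothetical stand-in for g(x); the real objective is not shown in this log.
def toy_g(x):
    return math.sin(x[0]) * x[1] ** 2


def toy_g_grad(x):
    return [math.cos(x[0]) * x[1] ** 2, 2.0 * math.sin(x[0]) * x[1]]


# Run the comparison for several step sizes and print the worst error for each.
for delta, numeric, analytic, worst in gradient_report(
        toy_g, toy_g_grad, [0.3, 1.7], [1e-3, 1e-5, 1e-7]):
    print(f"delta={delta:g}  worst abs error={worst:.3e}")
```

Sweeping over several deltas helps separate truncation error (which shrinks with delta) from a genuine mistake in the analytical expression (which does not).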

Implemented, examining results.

Okay, this is weird. All of the partial derivatives have low error (1e-5), but the final derivative of g(x) is huge (0.1).

Consider the analytical expression we're using for the gradient of g(x)

$$g' = -\tfrac{1}{2}\, y^\top S^\top V' S y$$

Plugging in the numerical estimate for V' above should give something close to the numerical estimate for g'. But they're nowhere close.

>> -0.5 * y' * S' * dV_hat * S * y

ans =

-0.1015

>> g_hat

ans =

0.0522

Stated differently, if we propagate the error in dV_hat through the expression for g', the resulting theoretical error in g' is far smaller than the true error in the analytical expression for g'.
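
One way to make that propagation explicit, as a sketch: the expression for g' is linear in V', so an error matrix E in the estimate of V' (E is a symbol introduced here for illustration) maps directly to an error in g' and can be bounded with the spectral norm.

```latex
\begin{aligned}
g'(V') &= -\tfrac{1}{2}\, y^\top S^\top V' S y, \\
g'(V' + E) - g'(V') &= -\tfrac{1}{2}\, (S y)^\top E \,(S y), \\
\left| g'(V' + E) - g'(V') \right| &\le \tfrac{1}{2}\, \lVert S y \rVert_2^2 \, \lVert E \rVert_2 .
\end{aligned}
```

If the observed discrepancy in g' is much larger than this bound, the error in V' alone cannot account for it.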

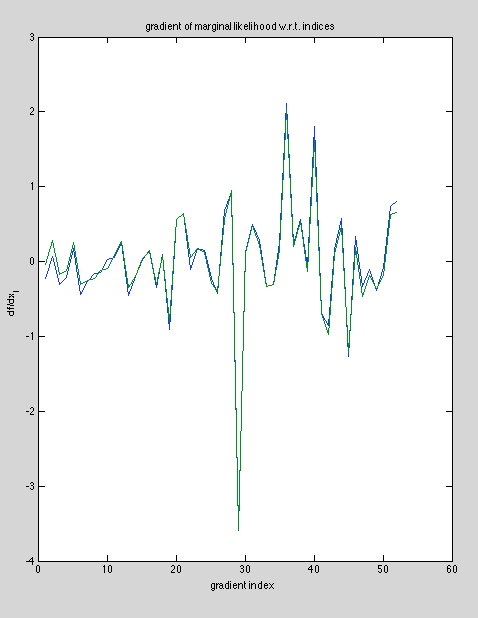

This strongly suggests there's a problem in our expression for g', and that the error is not due to approximation or precision issues. But if g' is so wrong, why are so many elements of the gradient so close to being correct? Recall the plot of gradients from yesterday, shown below.

Element 22 (the element under scrutiny in the tests above) has large error, but several other elements are nearly perfect. This suggests that if there is a problem in our expression, it affects only some elements of the gradient.

Is it possible there's a bug in the test logic? Maybe we're using the wrong field for y or S? Or maybe when we perturb x, we aren't updating all the intermediate fields?

Got it! We're neglecting the change in the normalizing "constant", Z. In the usual scenario, Z is constant w.r.t. the Gaussian distribution input y, but it is most certainly a function of the values of the Sigma matrix, so its derivative has to be included in g'.
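
To illustrate the effect, here is a small Python sketch using a 1-D zero-mean Gaussian whose variance depends on a parameter x. The parameterization `variance(x) = exp(x)` and all function names are assumptions made for this example; it is not the actual g(x) from this log. In the matrix case, the analogous missing term comes from the standard identity that the derivative of log det(Sigma) is tr(Sigma^{-1} Sigma').

```python
import math


def variance(x):
    """Hypothetical parameterization: the variance depends on x as exp(x)."""
    return math.exp(x)


def log_density(y, x):
    """Log of a zero-mean 1-D Gaussian density, including the normalizer Z."""
    s = variance(x)
    return -0.5 * y * y / s - 0.5 * math.log(2.0 * math.pi * s)


def grad_without_normalizer(y, x):
    """Derivative of the quadratic term only (treats Z as constant in x)."""
    s = variance(x)
    ds = s  # d/dx exp(x) = exp(x)
    return 0.5 * y * y * ds / (s * s)


def grad_with_normalizer(y, x):
    """Adds the derivative of -0.5 * log(2*pi*s), which is -0.5 * ds / s."""
    s = variance(x)
    ds = s
    return grad_without_normalizer(y, x) - 0.5 * ds / s


def numeric_grad(y, x, delta=1e-6):
    """Central-difference estimate of d/dx log_density(y, x)."""
    return (log_density(y, x + delta) - log_density(y, x - delta)) / (2.0 * delta)


y, x = 1.3, 0.4
print("numeric:           ", numeric_grad(y, x))
print("without normalizer:", grad_without_normalizer(y, x))
print("with normalizer:   ", grad_with_normalizer(y, x))
```

In this toy case the gradient that ignores Z is off by a constant 0.5, while each piece of the quadratic term is individually correct, which matches the pattern of accurate partial derivatives but a wrong final derivative.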

Posted by Kyle Simek